/root/miniconda3/envs/minicpmo/lib/python3.10/site-packages/_distutils_hack/__init__.py:53: UserWarning: Reliance on distutils from stdlib is deprecated. Users must rely on setuptools to provide the distutils module. Avoid importing distutils or import setuptools first, and avoid setting SETUPTOOLS_USE_DISTUTILS=stdlib. Register concerns at https://github.com/pypa/setuptools/issues/new?template=distutils-deprecation.yml

warnings.warn(

INFO 02-13 14:09:33 __init__.py:190] Automatically detected platform cuda.

INFO 02-13 14:09:34 api_server.py:840] vLLM API server version 0.7.2

INFO 02-13 14:09:34 api_server.py:841] args: Namespace(subparser='serve', model_tag='/mnt/workspace/MiniCPM-o-2_6', config='', host=None, port=8000, uvicorn_log_level='info', allow_credentials=False, allowed_origins=['*'], allowed_methods=['*'], allowed_headers=['*'], api_key='token-abc123', lora_modules=None, prompt_adapters=None, chat_template=None, chat_template_content_format='auto', response_role='assistant', ssl_keyfile=None, ssl_certfile=None, ssl_ca_certs=None, ssl_cert_reqs=0, root_path=None, middleware=[], return_tokens_as_token_ids=False, disable_frontend_multiprocessing=False, enable_request_id_headers=False, enable_auto_tool_choice=False, enable_reasoning=False, reasoning_parser=None, tool_call_parser=None, tool_parser_plugin='', model='/mnt/workspace/MiniCPM-o-2_6', task='auto', tokenizer=None, skip_tokenizer_init=False, revision=None, code_revision=None, tokenizer_revision=None, tokenizer_mode='auto', trust_remote_code=True, allowed_local_media_path=None, download_dir=None, load_format='auto', config_format=<ConfigFormat.AUTO: 'auto'>, dtype='auto', kv_cache_dtype='auto', max_model_len=4096, guided_decoding_backend='xgrammar', logits_processor_pattern=None, model_impl='auto', distributed_executor_backend=None, pipeline_parallel_size=1, tensor_parallel_size=1, max_parallel_loading_workers=None, ray_workers_use_nsight=False, block_size=None, enable_prefix_caching=None, disable_sliding_window=False, use_v2_block_manager=True, num_lookahead_slots=0, seed=0, swap_space=4, cpu_offload_gb=0, gpu_memory_utilization=1.0, num_gpu_blocks_override=None, max_num_batched_tokens=None, max_num_seqs=None, max_logprobs=20, disable_log_stats=False, quantization=None, rope_scaling=None, rope_theta=None, hf_overrides=None, enforce_eager=False, max_seq_len_to_capture=8192, disable_custom_all_reduce=False, tokenizer_pool_size=0, tokenizer_pool_type='ray', tokenizer_pool_extra_config=None, limit_mm_per_prompt=None, mm_processor_kwargs=None, disable_mm_preprocessor_cache=False, enable_lora=False, enable_lora_bias=False, max_loras=1, max_lora_rank=16, lora_extra_vocab_size=256, lora_dtype='auto', long_lora_scaling_factors=None, max_cpu_loras=None, fully_sharded_loras=False, enable_prompt_adapter=False, max_prompt_adapters=1, max_prompt_adapter_token=0, device='auto', num_scheduler_steps=1, multi_step_stream_outputs=True, scheduler_delay_factor=0.0, enable_chunked_prefill=None, speculative_model=None, speculative_model_quantization=None, num_speculative_tokens=None, speculative_disable_mqa_scorer=False, speculative_draft_tensor_parallel_size=None, speculative_max_model_len=None, speculative_disable_by_batch_size=None, ngram_prompt_lookup_max=None, ngram_prompt_lookup_min=None, spec_decoding_acceptance_method='rejection_sampler', typical_acceptance_sampler_posterior_threshold=None, typical_acceptance_sampler_posterior_alpha=None, disable_logprobs_during_spec_decoding=None, model_loader_extra_config=None, ignore_patterns=[], preemption_mode=None, served_model_name=None, qlora_adapter_name_or_path=None, otlp_traces_endpoint=None, collect_detailed_traces=None, disable_async_output_proc=False, scheduling_policy='fcfs', override_neuron_config=None, override_pooler_config=None, compilation_config=None, kv_transfer_config=None, worker_cls='auto', generation_config=None, override_generation_config=None, enable_sleep_mode=False, calculate_kv_scales=False, disable_log_requests=False, max_log_len=None, disable_fastapi_docs=False, enable_prompt_tokens_details=False, dispatch_function=<function serve at 0x7f7f59c5d090>)

INFO 02-13 14:09:34 api_server.py:206] Started engine process with PID 1581

/root/miniconda3/envs/minicpmo/lib/python3.10/site-packages/_distutils_hack/__init__.py:53: UserWarning: Reliance on distutils from stdlib is deprecated. Users must rely on setuptools to provide the distutils module. Avoid importing distutils or import setuptools first, and avoid setting SETUPTOOLS_USE_DISTUTILS=stdlib. Register concerns at https://github.com/pypa/setuptools/issues/new?template=distutils-deprecation.yml

warnings.warn(

INFO 02-13 14:09:37 __init__.py:190] Automatically detected platform cuda.

INFO 02-13 14:09:39 config.py:542] This model supports multiple tasks: {'generate', 'embed', 'score', 'classify', 'reward'}. Defaulting to 'generate'.

INFO 02-13 14:09:43 config.py:542] This model supports multiple tasks: {'reward', 'classify', 'embed', 'generate', 'score'}. Defaulting to 'generate'.

INFO 02-13 14:09:43 llm_engine.py:234] Initializing a V0 LLM engine (v0.7.2) with config: model='/mnt/workspace/MiniCPM-o-2_6', speculative_config=None, tokenizer='/mnt/workspace/MiniCPM-o-2_6', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.bfloat16, max_seq_len=4096, download_dir=None, load_format=auto, tensor_parallel_size=1, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=None, enforce_eager=False, kv_cache_dtype=auto, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='xgrammar'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=/mnt/workspace/MiniCPM-o-2_6, num_scheduler_steps=1, multi_step_stream_outputs=True, enable_prefix_caching=False, chunked_prefill_enabled=False, use_async_output_proc=True, disable_mm_preprocessor_cache=False, mm_processor_kwargs=None, pooler_config=None, compilation_config={"splitting_ops":[],"compile_sizes":[],"cudagraph_capture_sizes":[256,248,240,232,224,216,208,200,192,184,176,168,160,152,144,136,128,120,112,104,96,88,80,72,64,56,48,40,32,24,16,8,4,2,1],"max_capture_size":256}, use_cached_outputs=True,

INFO 02-13 14:09:44 cuda.py:230] Using Flash Attention backend.

INFO 02-13 14:09:44 model_runner.py:1110] Starting to load model /mnt/workspace/MiniCPM-o-2_6...

INFO 02-13 14:09:45 cuda.py:214] Cannot use FlashAttention-2 backend for head size 72.

INFO 02-13 14:09:45 cuda.py:227] Using XFormers backend.

Loading safetensors checkpoint shards: 0% Completed | 0/4 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 25% Completed | 1/4 [00:12<00:38, 12.95s/it]

Loading safetensors checkpoint shards: 50% Completed | 2/4 [00:31<00:32, 16.46s/it]

Loading safetensors checkpoint shards: 75% Completed | 3/4 [00:44<00:14, 14.92s/it]

Loading safetensors checkpoint shards: 100% Completed | 4/4 [00:55<00:00, 13.06s/it]

Loading safetensors checkpoint shards: 100% Completed | 4/4 [00:55<00:00, 13.79s/it]

INFO 02-13 14:10:41 model_runner.py:1115] Loading model weights took 15.7985 GB

/root/miniconda3/envs/minicpmo/lib/python3.10/site-packages/transformers/models/auto/image_processing_auto.py:590: FutureWarning: The image_processor_class argument is deprecated and will be removed in v4.42. Please use `slow_image_processor_class`, or `fast_image_processor_class` instead

warnings.warn(

Using a slow image processor as `use_fast` is unset and a slow processor was saved with this model. `use_fast=True` will be the default behavior in v4.48, even if the model was saved with a slow processor. This will result in minor differences in outputs. You'll still be able to use a slow processor with `use_fast=False`.

INFO 02-13 14:10:47 worker.py:267] Memory profiling takes 6.46 seconds

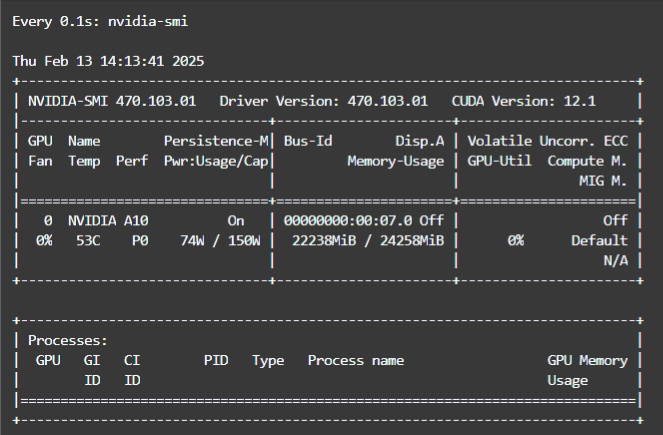

INFO 02-13 14:10:47 worker.py:267] the current vLLM instance can use total_gpu_memory (23.69GiB) x gpu_memory_utilization (1.00) = 23.69GiB

INFO 02-13 14:10:47 worker.py:267] model weights take 15.80GiB; non_torch_memory takes 0.06GiB; PyTorch activation peak memory takes 3.39GiB; the rest of the memory reserved for KV Cache is 4.45GiB.

INFO 02-13 14:10:47 executor_base.py:110] # CUDA blocks: 5207, # CPU blocks: 4681

INFO 02-13 14:10:47 executor_base.py:115] Maximum concurrency for 4096 tokens per request: 20.34x

INFO 02-13 14:10:50 model_runner.py:1434] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.

Capturing CUDA graph shapes: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 35/35 [00:14<00:00, 2.37it/s]

INFO 02-13 14:11:04 model_runner.py:1562] Graph capturing finished in 15 secs, took 0.75 GiB

INFO 02-13 14:11:04 llm_engine.py:431] init engine (profile, create kv cache, warmup model) took 23.74 seconds

INFO 02-13 14:11:04 api_server.py:756] Using supplied chat template:

INFO 02-13 14:11:04 api_server.py:756] None

INFO 02-13 14:11:04 launcher.py:21] Available routes are:

INFO 02-13 14:11:04 launcher.py:29] Route: /openapi.json, Methods: HEAD, GET

INFO 02-13 14:11:04 launcher.py:29] Route: /docs, Methods: HEAD, GET

INFO 02-13 14:11:04 launcher.py:29] Route: /docs/oauth2-redirect, Methods: HEAD, GET

INFO 02-13 14:11:04 launcher.py:29] Route: /redoc, Methods: HEAD, GET

INFO 02-13 14:11:04 launcher.py:29] Route: /health, Methods: GET

INFO 02-13 14:11:04 launcher.py:29] Route: /ping, Methods: GET, POST

INFO 02-13 14:11:04 launcher.py:29] Route: /tokenize, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /detokenize, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/models, Methods: GET

INFO 02-13 14:11:04 launcher.py:29] Route: /version, Methods: GET

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/chat/completions, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/completions, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/embeddings, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /pooling, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /score, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/score, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /rerank, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v1/rerank, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /v2/rerank, Methods: POST

INFO 02-13 14:11:04 launcher.py:29] Route: /invocations, Methods: POST

INFO: Started server process [1554]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)
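The memory-profiling and cudagraph lines in this log are internally consistent, and the arithmetic behind them can be checked by hand. The Python sketch below recomputes the KV-cache budget, the 20.34x concurrency figure (5207 blocks x 16 tokens = 83,312 tokens, divided by 4,096 tokens per request), and the 35 cudagraph batch sizes from the logged values. Two inputs are assumptions that the log does not print directly: a KV block size of 16 tokens (the args show `block_size=None`, and 16 is the usual vLLM default on CUDA) and a cap of 256 on the capture sizes (taken from `"max_capture_size":256` in the engine config). Because the logged GiB figures are rounded to two decimals, the recomputed budget comes out at 4.44 GiB rather than the logged 4.45 GiB.

```python
# Figures copied from the memory-profiling lines of the log (GiB).
TOTAL_GPU_MEMORY_GIB = 23.69
GPU_MEMORY_UTILIZATION = 1.00
WEIGHTS_GIB = 15.80
NON_TORCH_GIB = 0.06
ACTIVATION_PEAK_GIB = 3.39

NUM_GPU_BLOCKS = 5207  # "# CUDA blocks: 5207"
MAX_MODEL_LEN = 4096   # max_model_len=4096
BLOCK_SIZE = 16        # ASSUMPTION: default KV block size (block_size=None in the args)


def kv_cache_budget_gib() -> float:
    """Memory left for the KV cache after weights, non-torch memory and peak activations."""
    usable = TOTAL_GPU_MEMORY_GIB * GPU_MEMORY_UTILIZATION
    return usable - WEIGHTS_GIB - NON_TORCH_GIB - ACTIVATION_PEAK_GIB


def max_concurrency() -> float:
    """Number of full-length (MAX_MODEL_LEN) requests whose KV cache fits at once."""
    return NUM_GPU_BLOCKS * BLOCK_SIZE / MAX_MODEL_LEN


def cudagraph_capture_sizes(max_capture_size: int = 256) -> list[int]:
    """Batch sizes 1, 2, 4, then multiples of 8 up to the cap, largest first.

    ASSUMPTION: this reproduces the pattern seen in the logged list; it is not
    copied from vLLM's source.
    """
    sizes = [1, 2, 4] + list(range(8, max_capture_size + 1, 8))
    return sorted(sizes, reverse=True)


if __name__ == "__main__":
    print(f"KV cache budget: {kv_cache_budget_gib():.2f} GiB")
    print(f"max concurrency: {max_concurrency():.2f}x")
    sizes = cudagraph_capture_sizes()
    print(f"{len(sizes)} cudagraph sizes, largest {sizes[0]}")
```

The 35 sizes match the `35/35` shown by the "Capturing CUDA graph shapes" progress bar.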

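Once "Application startup complete" appears, the server exposes the OpenAI-compatible routes listed in the log on port 8000. The following is a minimal client sketch using only the Python standard library. It assumes the client runs on the same machine as the server (hence `localhost`), reuses `api_key='token-abc123'` from the args, and sends the model path as the model name, because `served_model_name` was not set and the engine config shows it defaulting to `/mnt/workspace/MiniCPM-o-2_6`. The helper names `build_chat_request` and `chat`, the prompt, and the `max_tokens` value are illustrative, not part of vLLM.

```python
import json
import urllib.request

# Values taken from the server log; BASE_URL assumes the client runs on the same host.
BASE_URL = "http://localhost:8000"
API_KEY = "token-abc123"
MODEL = "/mnt/workspace/MiniCPM-o-2_6"  # served_model_name defaults to the model path


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /v1/chat/completions route."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The server was started with an API key, so a Bearer token is required.
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Describe yourself in one sentence."))` should print the model's reply. If the `Authorization` header is missing or the key does not match, the server is expected to reject the request with HTTP 401.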